This post is part of a series – a symposium – on AfL.

- Part one of the series is by Adam Boxer here. In it he sets the context of the following posts.

- Part two is by Rosalind Walker here. She discusses the nature of school science and implications for the classroom.

- Part three is by Niki Kaiser here. This post explores concepts, threshold concepts, misconceptions, knowledge and understanding.

- Part four is by Deep Ghataura here. It is about the validity of formative assessment.

- Part five is by Ben Rogers here. It is about the assessment and improvement of writing in science.

- Finally, Dylan Wiliam has generously taken the time to respond with his thoughts.

The intention with this post is to try to think quite practically about assessment in science and draw on some of the ideas in the previous posts. I hope my thoughts are of some use.

Plenty has been written about formative assessment, and we are lucky in the STEM subjects that this has been a particular focus, but I still think that Adam Boxer is right: to some extent, this work has focused on science examples of generic practice, rather than an approach that specifically takes into account the structural features of teaching and learning in science. Partly for this reason, and partly because I feel I’m offering my personal experience, this post is going to leave aside that literature and sketch out how I think formative assessment in science might work.

My experience, over many years, is that it’s fairly straightforward and pretty instinctive to ask a few questions and get a vague gauge of whether your class are lost, or not. Unfortunately, whilst that does mean you can go back and have another go if you really have lost them, it frequently ends up with a set of books, a piece of work, or an end of topic test…

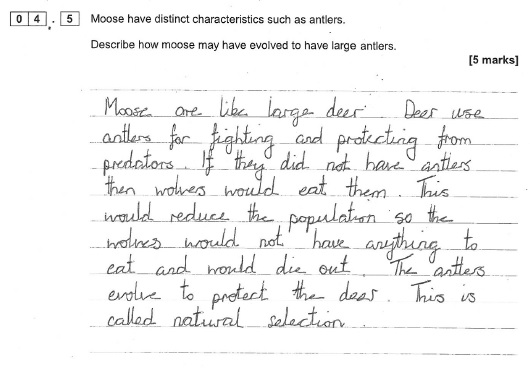

…that looks like this

These are the sorts of answers it is almost impossible to unpick. You can’t give feedback “to make adjustments to the students’ learning”. There is no way children can do any useful self- or peer-assessment – how many marks do you think they would give? Children writing these answers probably do have some partially-correct schemas. I bet you could tease a good answer out orally. But whatever led up to this assessment hasn’t prepared them for it in a way that makes their answer useful, to you, or to them.

I think a non-specialist might not appreciate this – just as Ben Rogers found. In fact, I think plenty of science teachers might try to take these answers and “make adjustments”. But it is a peculiarity of science that it is possible to have a bunch of appropriate key words and statements that are all true, in a grammatically correct arrangement, which sounds moderately convincing, but is totally wrong! If you want to get to a decent answer you don’t want to start from here!

Learning in science builds up from declarative knowledge, through exemplars, to inferences about unfamiliar contexts, as Rosalind Walker has set out so clearly. However, I would add that descriptions and explanations in science often have a requirement for precise structure as well as accuracy. To achieve this, both the declarative knowledge, and the exemplars, need to be totally solid. That is one driver of formative assessment in science. But then, although it makes sense to move on to inferences because there is some possibility that children will produce work that allows us to give useful feedback, considerable scaffolding is often still needed to help them get the structure right. Hence, assessment is inextricably linked to planning.

So, the drivers of formative assessment in science are: (1) checking recall of declarative and procedural knowledge, and exemplars; (2) identifying and addressing misconceptions or missing links within that knowledge; (3) providing feedback to improve inferences.

I also think formative assessment in science mainly consists of three key elements, though they are not a direct match to the three drivers. These are whole class questions; individual oral questions; and a keen sense of “What will good look like?”

Let me see if I can put these ideas together in a way that’s helpful.

Whole class questions:

Up to you whether you use mini-whiteboards, some more sophisticated edtech like Plickers or Kahoot, or quick paper and pencil mini-tests (again possibly with an edtech element such as Quick Key, or with you or the children checking them). There are pros and cons to each. I tend to use mini-whiteboards. Mr Barton’s approach for maths is here (all three posts are well worth a read).

This sort of assessment may be retrieval practice of declarative knowledge and exemplars, with feedback for the teacher and children about what has, and has not, stuck. Traditionally the declarative knowledge and exemplars have been mainly in the teacher’s head but I think the development of more formal knowledge organisers makes it easier to achieve comprehensive coverage. At a pinch, an experienced teacher can extract this on the fly from a textbook, or even an exam spec, but having it set out properly is definitely better, particularly for the children to use themselves.

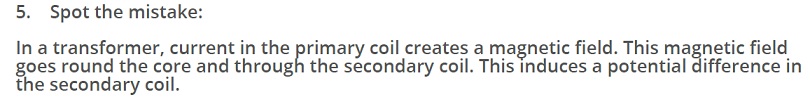

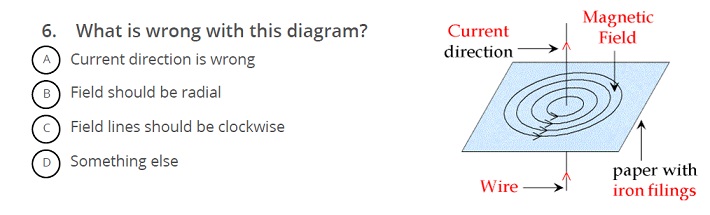

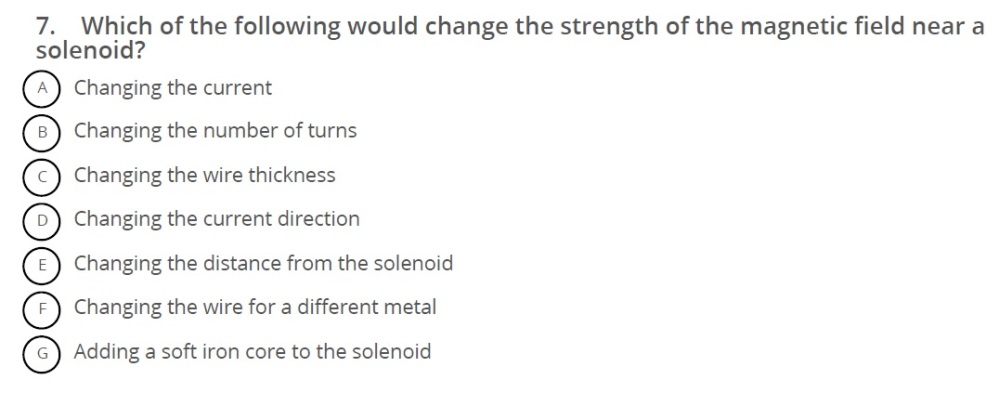

Whole class questions can also be used to help identify and address typical errors and misconceptions. This requires carefully written questions, sometimes about declarative or procedural knowledge, sometimes about exemplars, and sometimes about inferences. Harry Fletcher-Wood has written extensively about this. Multiple choice is the obvious format but I also think ‘spot the mistake’ works well. Here are three (quite tough – you’re science teachers!) examples:

We’re not there yet with a really good question bank resource in KS3/4 science. However, the Diagnostic Question web resource is a decent start (try ordering the questions by “Most Quiz Inclusions”) and there is other work to build on like the University of York Science Education Group EPSE project that Rosalind and Ben both referred to in their blogs.

Individual oral questions:

The problem here is that if you are asking questions as formative assessment, you only get one (or two) responses, and it’s easy to mistakenly generalise to the whole class. If based on the children with hands up then this is almost certain to be misleading. Tom Sherrington’s suggestion to think about a class in groups is helpful. Be subtle about it or you’ll reinforce expectations and piss the kids off, but to dip-test understanding, ask children mainly from the bottom two sections of the table.

My main use of individual questions, though, is to support children’s thinking to help with understanding exemplars, or to model and provide feedback on inferences:

Exemplars:

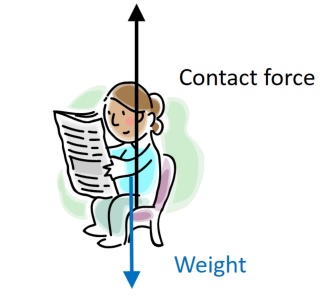

e.g. draw this on the board

and ask questions like:

- Is this force diagram correct?

- What would happen if the forces were like this?

- If the forces were like this would there be a resultant force?

- What (always) happens when there is a resultant force acting on an object?

- What should the force diagram look like?

Inferences:

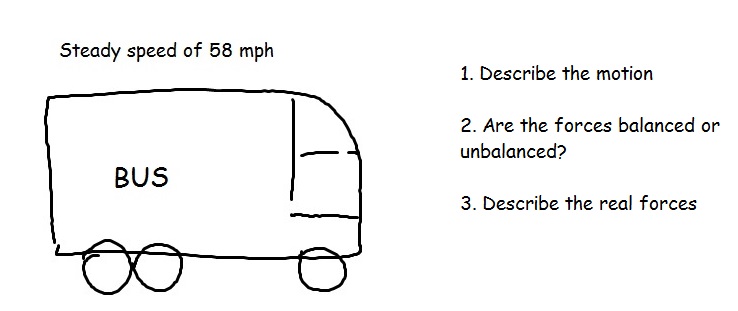

e.g. draw this on the board and ask children to “describe and explain the forces acting on this bus”.

“Sir, there’s a force and air resistance that keeps it moving at a constant velocity”

“That sounds like you might be thinking correctly but your answer isn’t clear. What’s the first thing you need to say?”

“About the motion?”

“Yes, describe the motion – go on then.”

“The bus has a constant velocity because there’s a force keeping it moving and air resistance.”

“Make it simpler – just describe the motion”

“The bus has a constant velocity”

“Yes, what’s next?”

“So there’s a force keeping it moving and air resistance”

“No, look at the board, what’s next?”

“Oh – I have to say if the forces are balanced?”

“Yes, do that now”

“They’re balanced, sir.”

“That’s better. Now can you do the whole answer?”

“The bus has a constant velocity, so they’re balanced.”

“What’s balanced?”

“The forces?”

“So, give me the whole answer.”

“The bus has a constant velocity, so the forces are balanced.”

“And the last bit?”

“The real forces?”

“Yes”

“The bus has a constant velocity, so the forces are balanced, so the forwards force is the same size as the air resistance.”

“That’s a perfect answer now… James (a different child – probably one more likely to need more support, or just not paying enough attention), can you also give me a perfect answer to the same question?”

Note how long this sequence is. I think we do have to operate at this level of questioning in science because this is the level of precision required. Look at Niki Kaiser’s modelling of the thinking required to determine the formula of magnesium sulphate. As @DavidDidau has put it, we have to make the implicit, explicit, because only then can we provide feedback at the level needed.
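The bus answer has the same three-step structure when it is written in symbols. This is only a sketch: it assumes the only two horizontal forces on the bus are a forwards driving force and air resistance, and the symbols $F_{\text{forwards}}$, $F_{\text{air}}$, $m$ and $a$ are hypothetical labels rather than anything taken from the diagram.

$$
\begin{aligned}
v = \text{constant} \;&\Rightarrow\; a = 0 \\
F_{\text{resultant}} = m a \;&\Rightarrow\; F_{\text{resultant}} = 0 \\
F_{\text{resultant}} = F_{\text{forwards}} - F_{\text{air}} \;&\Rightarrow\; F_{\text{forwards}} = F_{\text{air}}
\end{aligned}
$$

Each line maps onto one part of the spoken answer: describe the motion, say whether the forces are balanced, then name the real forces.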

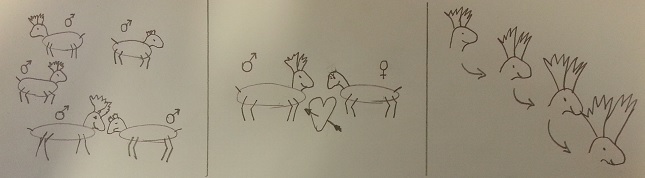

How do we actually cope with inferences, or applying procedural knowledge, in an unfamiliar context? If we are experts then we are capable of picking out the deep structure in a problem, and answering in a way that includes the declarative knowledge, even if we are making up the superficial structure of the response off the cuff. This, I think, is when we have built, crossed (and perhaps re-crossed many times) Niki Kaiser’s level of threshold concepts. Novices just can’t cope with this. They need most of the superficial structure to come from long-term memory (or initially from scaffolding), otherwise they produce answers like the one about the antlers at the start of this post. Individual questioning is one way to provide that scaffolding and help them to reach the point where at least some of that scaffolding can be withdrawn.

What will good look like?

However, to do this through individual questioning, or to scaffold in any other way, the critical question is “What will good look like?” As a teacher, if you aren’t crystal clear about this then you just end up with differentiation by outcome, with any struggling children producing mainly garbage. Almost all my work these days is with trainee teachers and this is where their planning usually goes down the tubes. Trying to teach to a learning objective such as “Be able to state Newton’s 1st Law” is obviously hopeless since that’s just a single sentence that needs committing to long-term memory. “Be able to apply Newton’s 1st Law” is what is usually meant but that’s also far too vague. What is needed is clarity about the declarative knowledge, and a really consistent superficial structure that can be translated from the exemplars to the inferences. Once that is clear, there is a much better chance of teaching coherently, and providing suitable scaffolding. Get this right and instead of impossible answers about the evolution of moose, you’ll hopefully have something you can work with.

My favoured forms of scaffolding are:

Key words

Describe how moose may have evolved to have large antlers.

Use these key words

variation genetic differences selection pressure survive reproduce passed on many generations

Questioning – “if they can say it they can write it”

See my example above about the bus

A writing frame – make sure they use whole sentences so they can be transferred to another inference

| Question | Your answer |
| --- | --- |
| Explain why there is variation in antler size | |
| What happens if there is a selection pressure? | |
| How long does it take? | |

Diagrams then writing

Diagrams you provide or they create? In this case a little storyboard about evolution of large antlers.

Jumbled sentences

| | |
| --- | --- |
| Because of selection pressure | Over many generations antlers get bigger |
| There is variation in antler size | Are more likely to survive and reproduce |
| The genes for large antlers are passed on | Moose with larger antlers |
| Due to genetic differences | |

Cloze paragraph (with or without key words)

There is _________________ in antler size due to ____________________. Because of _____________________ moose with larger antlers are more likely to _________________ and __________________. So the ______________ for large antlers are __________________. Over many _________________, antlers get bigger.
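If you keep model answers electronically, a cloze paragraph like this one can be produced from the model answer and the key word list rather than typed out by hand. The following is a minimal sketch in Python, not a recommended tool: the function name `make_cloze` is hypothetical, and the filled-in model answer is my reconstruction from the key words and jumbled sentences above.

```python
import re


def make_cloze(model_answer, key_words, blank="__________"):
    """Return model_answer with every key word or phrase replaced by a blank."""
    if not key_words:
        # Nothing to blank out, so hand the answer back unchanged.
        return model_answer
    # Try longer phrases first so a multi-word key phrase is blanked as a whole.
    ordered = sorted(key_words, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(word) for word in ordered) + r")\b",
        flags=re.IGNORECASE,
    )
    return pattern.sub(blank, model_answer)


# Assumed model answer, reconstructed from the scaffolds in this post.
MODEL_ANSWER = (
    "There is variation in antler size due to genetic differences. "
    "Because of selection pressure moose with larger antlers are more "
    "likely to survive and reproduce. So the genes for large antlers are "
    "passed on. Over many generations, antlers get bigger."
)

KEY_WORDS = [
    "variation", "genetic differences", "selection pressure",
    "survive", "reproduce", "genes", "passed on", "generations",
]

cloze = make_cloze(MODEL_ANSWER, KEY_WORDS)
print(cloze)
```

Shortening the key word list gives a looser scaffold from the same model answer, which fits the idea of withdrawing support gradually.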

If the scaffolding is tight (like the last two examples above) then assessment is about checking for correct outcomes by asking individuals. Again, use of in-class groupings is helpful. You can scatter the checking around the class but include some of the children most likely to be wrong. And if you get anything other than confident responses I think you need to ensure they’ve got a good version in their books. With some classes/children you might need to have a clean copy to stick in. The next step is to identify the exemplar bits and the inference bits – the bits of superficial structure to keep, and those that will change as the context changes. Highlighting is good for this and you can do this as a whole class with them suggesting, which is another way to provide immediate feedback.

However, the last bit of the process is the thing I love the most in science teaching – right up there with the “Ah!” moments with crossed polaroids, and blowing through straws to model resistors in parallel. With looser scaffolding (either straight off or building new inferences on the tighter scaffolding above) you get responses that are good enough for quality feedback and now grabbing children’s work and improving it on the hoof is possible. I have a visualizer and often get students to work on mini-whiteboards, but an iPad linked to the projector is an option, or a webcam, or the old-skool solution of quickly copying out on your board works just fine. The trick is to take the answer and edit so it matches “What good will look like”. Model your thinking as you go, particularly the comparison between exemplars/inferences, the stating of declarative knowledge, and the need for short, clear sentences.

To my mind, this is what ‘sharing learning objectives’ means in science. It doesn’t mean telling them (or writing it on the board or whatever); it means showing them ‘what good will look like’ (WAGOLL if you must), and building that image up bit by bit.

Now – rinse and repeat. If you move on before exploring a range of inferences, only the highest-achieving children are likely to be able to retain much. But if they can explain how moose have evolved large antlers then they can have a sensible go at how polar bears evolved thick fur, desert foxes evolved big ears, giraffes long necks, cheetahs amazing speed, woodpeckers indestructible heads, monkeys prehensile tails, or whatever. Scaffolding now comes from their own work and, eventually, from their long-term memory.

I hope some of these thoughts are useful. To my mind, the processes of planning and assessment are really two sides of the same coin. Effective formative assessment in science is about understanding the importance of having something you can actually work with; you get that through good planning; and the good planning comes from clarity about the special way knowledge is structured in science plus, of course, the misconceptions and subtleties around each individual topic.

This draws it all together really nicely, thank you. The moose/deer example clearly exhibits Adam and Rosalind’s starting point that feedback must be subject-specific and steeped in procedural knowledge or else is futile.

Your strategies for forms of scaffolding are accessible and recognizable and it is good to see them all in one place. I am sharing the whole Symposium with my colleagues. This post, in particular, meets us where we are at as a department and gives practical help as we transform task design within our lessons in order to get information to inform planning. Underpinned with ideas such as Threshold Concepts, writing to develop argument and select relevant facts, and keeping an eye on validity, I think we will find much of direct usefulness and inspiration from this collection of blog posts.

Hi – thanks for the blog, agreed with the other comment that this symposium has been incredibly useful in planning how to move feedback forward for our Dpt.

I have a question….

Do you know of a good word or phrase for “teaching or re-teaching that happens in response to student work”?

I feel like it’s more than just ‘feedback’ or ‘whole-class feedback’ because the emphasis goes beyond giving feedback specifically on the marked task, to actually re-teaching what students needed to know to succeed on that task – re-teaching/addressing the underlying knowledge or skills students needed.

For example, if the teacher marking that question about evolution of moose had decided, “hold on, I need to go back and re-teach how to answer 5-mark questions on evolution”.

It will be well planned and may be resourced with multiple activities: for example, teacher (re-)explanation, modelling, comparison/analysis of better and worse answers, and student practice with scaffolding, perhaps culminating in the student re-attempting similar work to the original task (which will hopefully now be better).

Any ideas?

Thanks,

Tom